Explainable, Audited, Accountable: Tezi's Anti-Bias Standards for AI in Recruiting

How Tezi's anti-bias system improves hiring quality, fair outcomes, and regulatory compliance for our customers

Bias in hiring didn’t begin with AI. It’s been with us in job descriptions, résumé screens, and interviews for decades. The useful thing AI gives us isn’t perfection; it’s measurement. Done right, AI makes decisions easier to audit, explain, and improve. That’s the bar we set at Tezi.

What Tezi’s AI agents do (and don’t)

Tezi’s AI agents review every inbound applicant against the employer’s predefined criteria, run a short structured screen (eligibility, location, timing, compensation, etc.), answer candidate questions, and produce transparent, job-related recommendations for the hiring team. The handoff is what matters most: our agents do not make advance/reject decisions. They recommend; people decide. That’s both how we’ve built the product and how I’ve described it publicly. (youtube.com)

The agents are blind by design to protected-class information (e.g., gender, race, ethnicity, profile photos, names). We intentionally engineer away inputs that shouldn’t influence a hiring outcome and focus on evidence tied to a candidate’s track record and skills.
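To make the idea of blind inputs concrete, here is a minimal sketch of removing protected-class fields from an applicant record before any scoring step sees it. The field names, the `blind_applicant` helper, and the record layout are hypothetical illustrations, not Tezi’s actual schema or implementation.

```python
# Hypothetical field names chosen for illustration; not Tezi's actual schema.
PROTECTED_FIELDS = {"name", "gender", "race", "ethnicity", "photo_url"}


def blind_applicant(record: dict) -> dict:
    """Return a copy of the record with protected-class fields removed."""
    return {key: value for key, value in record.items() if key not in PROTECTED_FIELDS}


applicant = {
    "name": "Jordan Example",
    "gender": "F",
    "photo_url": "https://example.com/photo.jpg",
    "years_experience": 6,
    "skills": ["python", "sql"],
}

# Only job-related fields remain; the original record is left unchanged.
blinded = blind_applicant(applicant)
```

Dropping fields like this handles only direct inputs. Proxies for protected attributes (for example, details that correlate with a demographic group) need separate handling, which is one reason outcome measurement still matters.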

Why “fairness through unawareness”

We chose a blind approach because candidates find it fair and straightforward. In a recent study, people rated hiring algorithms as fairest when they didn’t use demographic attributes. They also viewed companies using that approach more positively and were more willing to apply to them. This doesn’t mean Tezi ignores outcomes. It means we start with a process that is understandable to candidates and then measure the results carefully.

A fair system can explain itself

A fair hiring system should show its work: which signals mattered, how they were weighed, and why a recommendation was made. Tezi’s AI agents log the job-related evidence behind every recommendation, allowing recruiters and hiring managers to examine the rationale.

By contrast, human decision-making is powerful but not always transparent – even to ourselves. Two recent strands of evidence matter:

Unstructured interviews can seem convincing but may compromise accuracy. Research in Judgment and Decision Making shows we often “make sense” of conversational details that dilute predictive signal, raising confidence while lowering validity. That’s hard to audit after the fact.

Bias appears under pressure. A recent paper found participants were ~30 percentage points more likely to hire workers perceived as White vs. Black, and time pressure amplified discriminatory choices (exactly the environment of rushed résumé screens).

When we pair structured, logged AI recommendations with human judgment, we achieve the best of both: consistency and explainability upfront, along with accountability and context at the decision point.

Evidence that the baseline isn’t fair

Large-scale studies continue to show discrimination in hiring:

A PNAS meta-analysis of U.S. field experiments spanning 26 years (1989–2015) found no decline in discrimination against Black applicants over time. The human baseline has problems we can’t wish away.

An employer-level experiment summarized by the University of Chicago’s Becker Friedman Institute graded big firms: the worst-graded favored White over Black applicants by ~24%; the best still showed a smaller but measurable gap.

This is why we focus on design choices (blind inputs, structured screens) and ongoing audits.

Audits, not assurances: what we measure and publish

Our collaboration with Warden AI on The State of AI Bias in Talent Acquisition 2025 echoes what we see internally:

AI vs. human fairness: Warden’s industry data shows AI averages 0.94 on fairness metrics compared to 0.67 for human-only processes, and AI can be up to 45% fairer for women and racial-minority candidates when implemented responsibly.

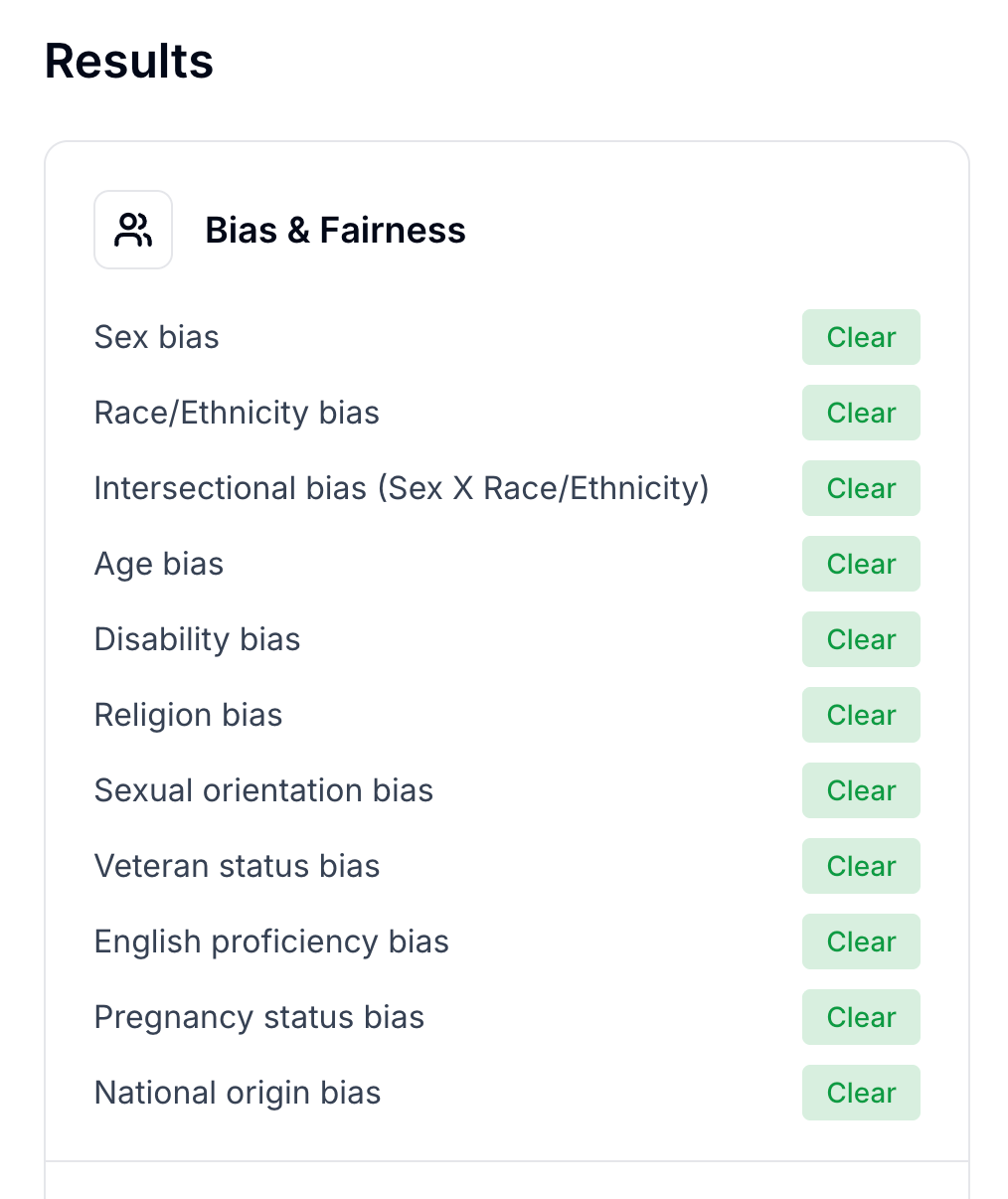

Independent audit: We’ve undergone an external audit under New York City’s Local Law 144, Colorado SB24-205, California FEHA, and the EU AI Act (Regulation 2024/1689). On the audited use cases (application review and chat screening), Tezi’s system showed no measured bias on any of the eighteen protected-class dimensions, consistent with how we design and validate. See the details on our AI Audit Center.

Continuous monitoring: We onboarded Warden AI to automatically test our AI agents across eighteen protected-class dimensions every month. Continuous monitoring matters because our product evolves quickly, laws change, and data drifts; recurring audits create a correction loop instead of a one-time certificate.

Put simply, we prefer proof over promises, and we instrument the system so we can continually prove it.
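For readers who want a feel for what a bias measurement can look like, below is a minimal sketch of an impact-ratio calculation: each group’s selection rate divided by the highest group’s selection rate, the general form of metric used in NYC Local Law 144 bias audits. This is an illustration under stated assumptions, not the metric or methodology Warden AI or Tezi uses. The `impact_ratios` function, the group labels, the sample data, and the 0.8 review threshold (the familiar four-fifths rule of thumb) are all chosen for the example.

```python
from collections import Counter

# Illustrative review threshold (the "four-fifths" rule of thumb); an assumption,
# not a value taken from any audit described in this article.
REVIEW_THRESHOLD = 0.8


def impact_ratios(outcomes):
    """Compute each group's selection rate divided by the highest group's rate.

    `outcomes` is an iterable of (group, was_selected) pairs.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1

    rates = {group: selected[group] / totals[group] for group in totals}
    best_rate = max(rates.values())
    if best_rate == 0:
        raise ValueError("No group has any selections; impact ratios are undefined.")
    return {group: rate / best_rate for group, rate in rates.items()}


# Made-up data: group A advances 50 of 100 applicants, group B advances 30 of 100.
sample = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 30 + [("B", False)] * 70
)

ratios = impact_ratios(sample)

# Groups whose ratio falls below the illustrative threshold are flagged for review.
flagged = sorted(group for group, ratio in ratios.items() if ratio < REVIEW_THRESHOLD)
```

Running a check like this on a schedule, rather than once, is what turns an audit into a correction loop: a ratio that drifts after a product change shows up in the next run.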

Anti-bias efforts matter for legally compliant hiring

New York City, Colorado, and California are the most consequential U.S. jurisdictions with AI-specific rules touching hiring today:

New York City (Local Law 144): If you use an automated employment decision tool in NYC, you must complete an independent bias audit, publish a summary of results, and give advance notice to candidates. Screening use counts – not just final decisions.

Colorado (SB24-205): The first statewide law covering “high-risk” AI systems, including those used in employment decisions. It imposes duties on developers (Tezi) and deployers (employers) for risk management, impact assessments, monitoring, and reporting, with enforcement under the Colorado Consumer Protection Act.

California (FEHA ADS Regulations): California’s Civil Rights Council finalized rules under FEHA, effective October 1, 2025, that expressly regulate Automated-Decision Systems (ADS) in employment. They prohibit discriminatory use of ADS or selection criteria and expand documentation, record-keeping, and accommodation duties, creating one of the most comprehensive state frameworks for AI in hiring.

Designing to these standards – and monitoring continuously – means we’re not just compliant in one jurisdiction; we’re building durable governance for how AI is used in hiring.

What we commit to

Blind by default. Tezi’s agents exclude protected-class inputs and obvious proxies where possible.

Human in the loop. Agents recommend; people decide.

Explainable outputs. Every recommendation includes the job-related evidence and rationale.

Independent, ongoing audits. Third-party audits run every month across eighteen protected classes.

Regulatory compliance. We designed Tezi to reduce bias from the outset, which enabled us to meet the NYC, CO, and CA requirements with ease.

The outcome we’re after

Better hiring isn’t only about quality and speed; it’s also about trust. Candidates engage when they know they’ll be evaluated on job-related merit. Recruiters and hiring managers make stronger decisions when they can see the reasoning, compare it across groups, and course-correct with data.

That’s why we design Tezi’s AI agents to be explainable, audited, and accountable – and why we measure fairness as an ongoing practice, not a one-time claim.

If you want to see how this works on your roles – especially if you’re sitting on an overwhelming pile of applications – get in touch. Our team would love to give you a demo.